An A/B Test In Slow Motion: Before Your Experiment Becomes Significant

Looking at the final snapshot of an experiment result, the liveliness of its effects is rendered completely invisible. In reality, any A/B test is quite dynamic, as anyone who watches experiments in real time will know. Understanding these subtle dynamics can be useful to anyone reading test results. So I’ve slowed down one such positive experiment result and laid out its various time frames. My hope is that this sheds some light on what to expect from your data as you run A/B tests.

Note About The Experiment

One important note about this experiment is that it's a positive one, based on the highly repeatable Canned Response pattern. We knew beforehand that it was very likely to be positive (multiple earlier positive experiments combine into a higher degree of prediction). If this were a flat experiment (with no effect, or only a tiny insignificant one), we could expect far more up and down movement.

The Observations

- Effects Fluctuate - Especially In The Beginning

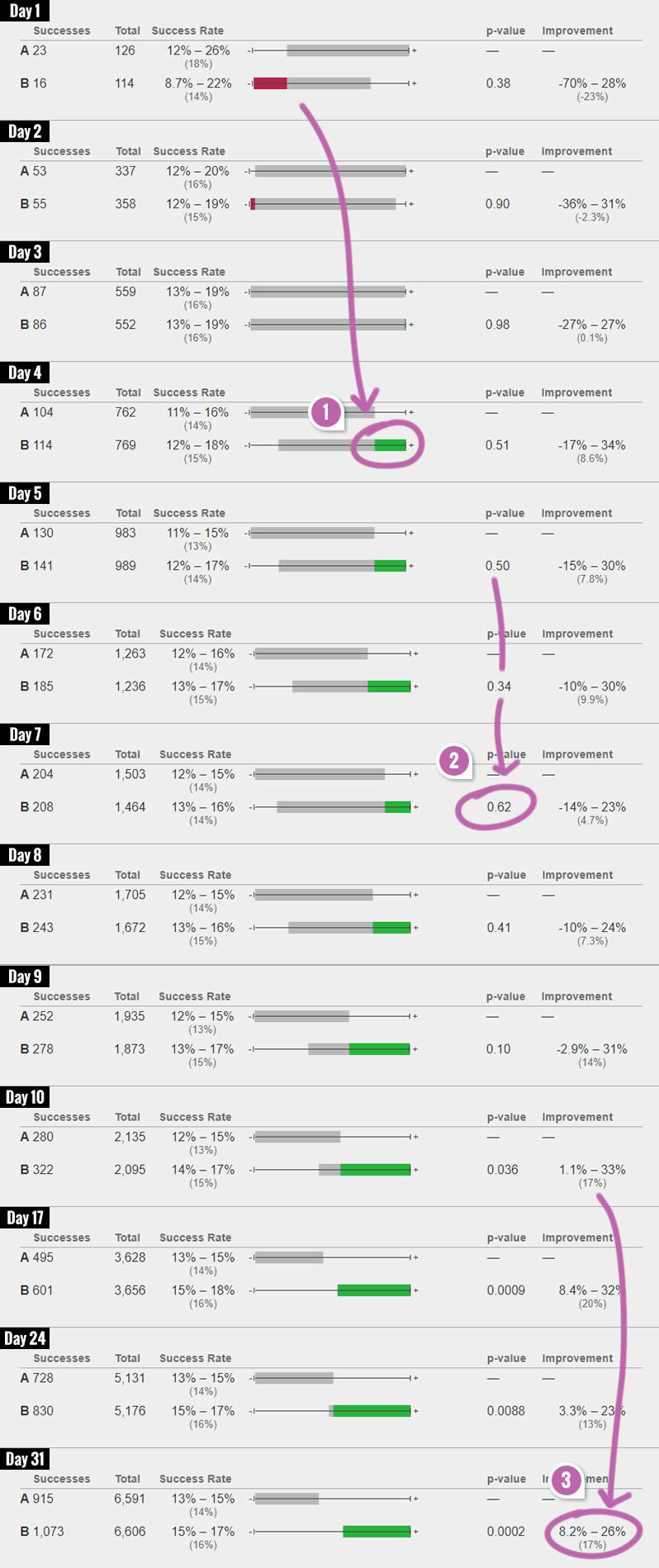

Early experiment results and their effects can be extremely misleading. Effects at this point fluctuate quite often, with what seems negative turning positive and vice versa. This is very normal behavior, and it can be seen in our experiment above: comparing day 1 and day 4, our effects have completely reversed. Moreover, in the early days of the experiment the effect range is also more drastic. In the first 10 days we can observe effects ranging from -23% all the way to +17%, whereas between days 10 and 31 the effect stabilises between +13% and +20% (again, keep in mind that this is a positive experiment at the end of the day).

- P-Values Fluctuate - Also More In The Beginning

In the beginning of any experiment, it's also common for p-values to move up and down dramatically (reminder: a p-value is a measure of statistical significance - the probability of seeing a difference at least this large if A and B were actually the same, so the lower the number, the stronger the evidence that A and B differ). A decreasing p-value (rising significance) does not mean that it will continue to decrease. If we simply look at days 5, 6 and 7, we can easily spot time frames of such significance reversal. At the same time, given enough sample (visitors or test participants), if there is a true effect, the p-value should continue its downward trend (as visible in the later stages of the experiment).

- Confidence Intervals Tighten

Finally, if we're looking at an experiment with a true effect, then as more data accumulates, our confidence intervals will also narrow. This essentially means that we can be more confident that the true effect falls within a tighter range. For example, both days 10 and 31 have the same +17% effect, but the later confidence interval is much tighter, ranging between 8.2% and 26% (as opposed to the earlier, wider range of 1.1% to 33%).
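To make the three observations concrete, here is a minimal Python sketch that computes a relative effect, a two-sided p-value and a rough 95% confidence interval from cumulative counts. Everything in it is an assumption for illustration: the visitor and conversion counts are made up (they are not the data from this experiment), the function name `compare` is hypothetical, and the statistics are a plain pooled two-proportion z-test with a normal-approximation interval, which is not necessarily how Abba or any other tool calculates its numbers.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def compare(conv_a, n_a, conv_b, n_b, z_crit=1.96):
    """Return (relative effect, two-sided p-value, (ci_low, ci_high)) for B vs A.

    Hypothetical helper: pooled two-proportion z-test plus a normal-approximation
    interval on the difference, expressed relative to A's conversion rate.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    diff = rate_b - rate_a
    effect = diff / rate_a  # relative effect, e.g. 0.17 means +17%

    # p-value: how surprising is this difference if A and B were truly the same?
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    p_value = 2 * (1 - normal_cdf(abs(diff) / se_pooled))

    # Rough interval: treats A's rate as fixed when converting to relative terms.
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    ci = ((diff - z_crit * se) / rate_a, (diff + z_crit * se) / rate_a)
    return effect, p_value, ci


# Made-up cumulative counts: (label, conversions A, visitors A, conversions B, visitors B)
snapshots = [
    ("early", 100, 1000, 117, 1000),
    ("late", 400, 4000, 468, 4000),
]

for label, ca, na, cb, nb in snapshots:
    effect, p, (lo, hi) = compare(ca, na, cb, nb)
    print(f"{label}: effect={effect:+.1%} p={p:.3f} CI=[{lo:+.1%}, {hi:+.1%}]")
```

With these made-up numbers the relative effect is +17% in both snapshots, yet only the later one falls below a 0.05 p-value, and its interval is half as wide because it has four times the sample.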

Comments

Ahinton 6 years ago

Thanks for this. If one was comfortable with 95% stat sig, what would stop them from ending the experiment at Day 10? Likewise if you were after 99% it appears you achieve that at Day 17. Was there a predetermined sample size you were waiting on?

Also in the image caption am I understanding correctly that while you observed a 17% improvement in TY page visits, that only translated to a 1% increase in sales?


Jakub Linowski 6 years ago

Hello. In this experiment we wanted (and had the privilege of) gaining more confidence - and so we ran the test longer. Given that p-values fluctuate, a threshold of 0.05 or 0.01 as a stopping rule usually introduces a lot more false positives. We were less concerned with a predetermined sample size, more with giving ourselves 2-4 weeks (actually 1 full month) on this one.

As far as the relative 17% gain, yes, that turned out to be approximately an absolute gain of 2% more leads in this experiment.


Stephan Becker 6 years ago

Very instructive indeed. Looking at the screenshot, I'm wondering: is that a tool we can purchase somewhere that shows A/B test results through time, day by day? If so, what tool would you recommend? Thanks a lot


Jakub Linowski 6 years ago

Hi Stephan,

I was using Abba from Thumbtack and copied the data by hand :) Available here: http://thumbtack.github.io/abba/demo/abba.html

Best,

Jakub


Ilincev 6 years ago

Would be great to see negative and inconclusive A/B tests as well.
